Context length VS Max token VS Maximum length - API - OpenAI. Context length (or context window) usually refers to the total number of tokens permitted by your model. It can also refer to the number of ...

Context is Everything: Why Maximum Sequence Length Matters

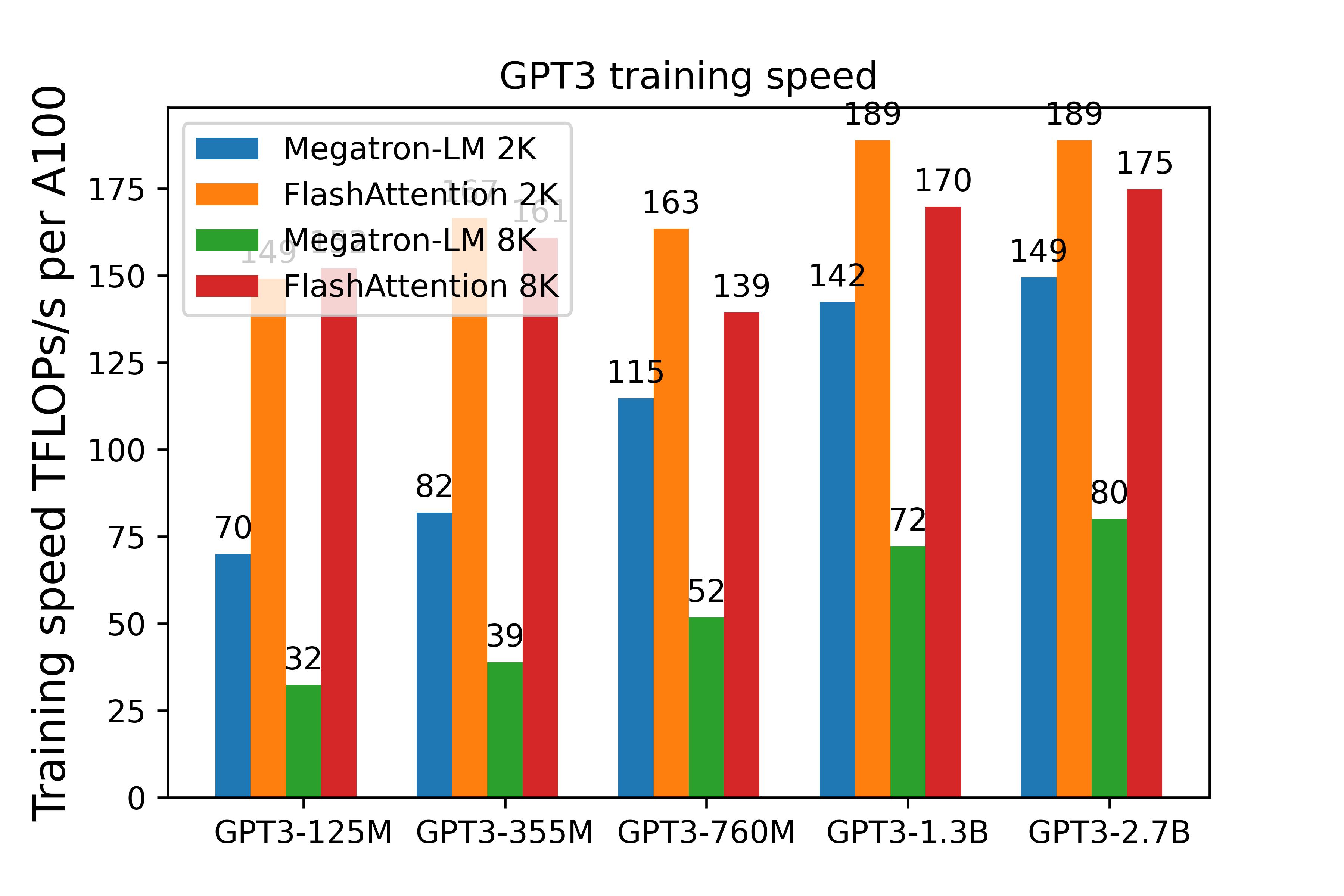

*FlashAttention: Fast Transformer Training with Long Sequences*

Context is Everything: Why Maximum Sequence Length Matters. GPU Impossible™ sequence lengths on Cerebras systems may enable breakthroughs in Natural Language Understanding, drug discovery and ...

python - Effect of max sequence length on Grover - Stack Overflow

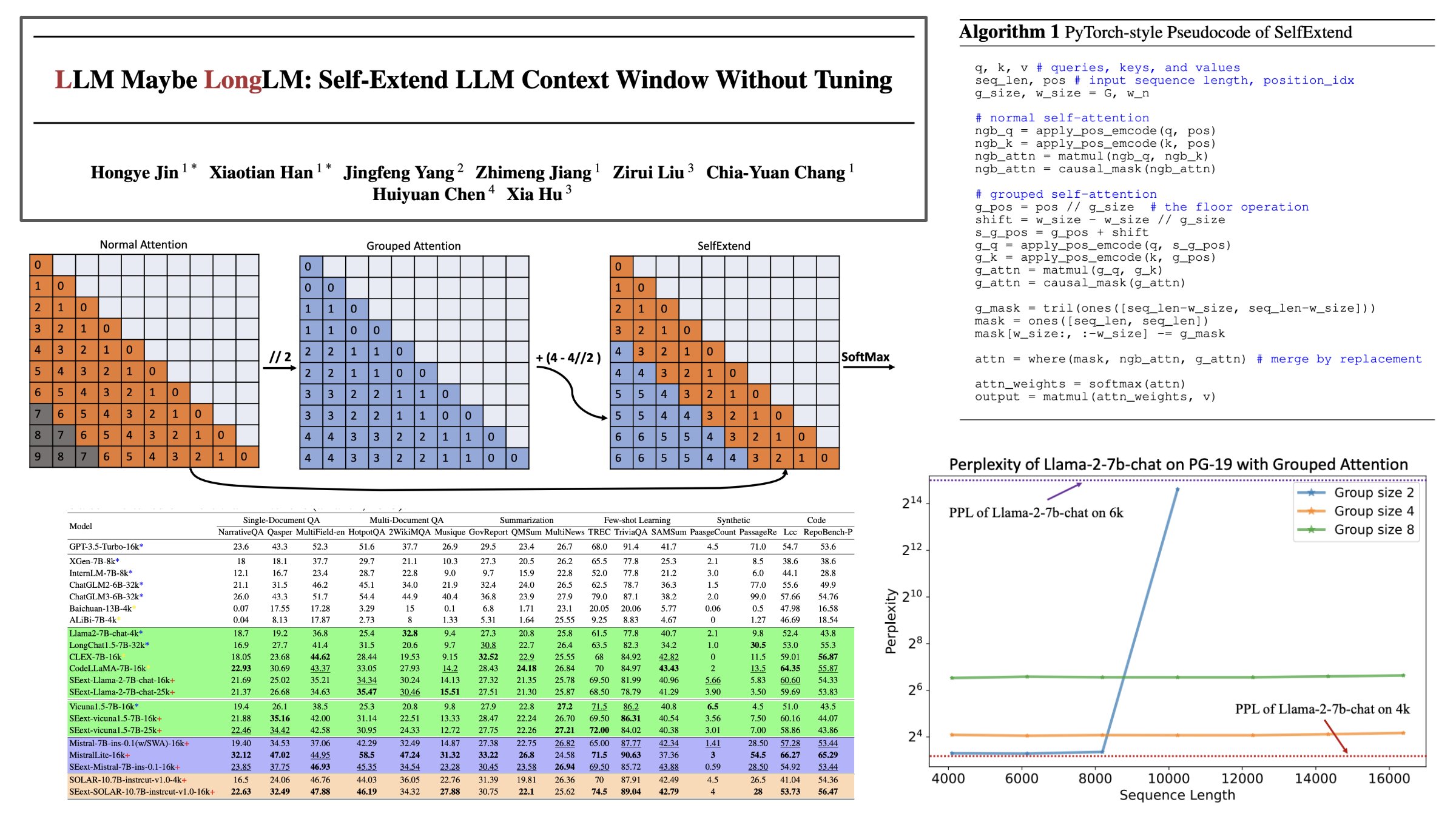

*Extending Context Length in Large Language Models | by Donato*

python - Effect of max sequence length on Grover - Stack Overflow. Related questions listed on the page: How many RNN units are needed for tasks involving sequences? · Difference between max length of word ngrams and size of context window.

Unit of measurement for sequence length: unit base pairs versus

Unit of measurement for sequence length: unit base pairs versus ... context of ~length~). Also, are the sequences in the read 1 file and the read 2 file all technically in unit nucleotides and not in unit base pairs?

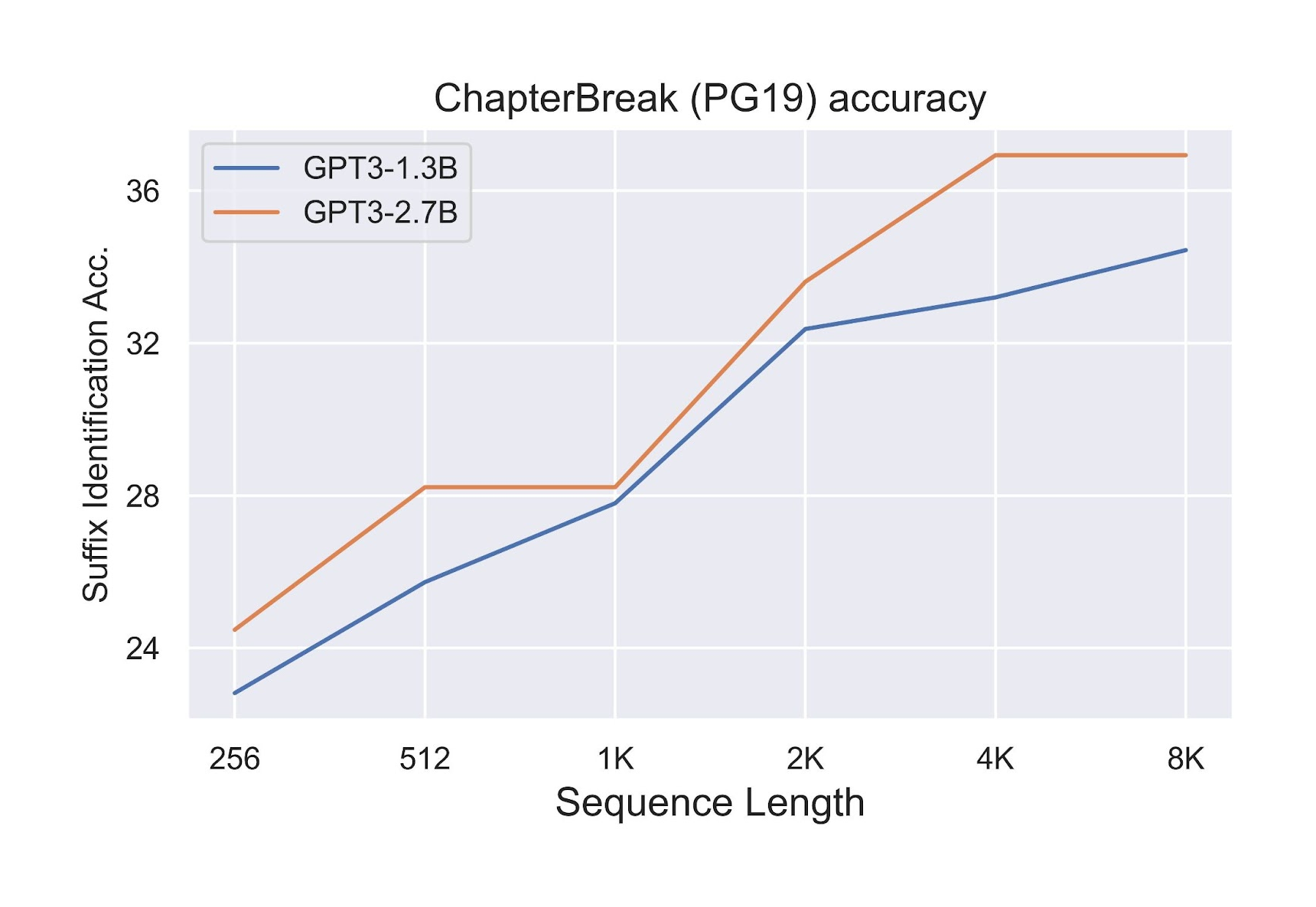

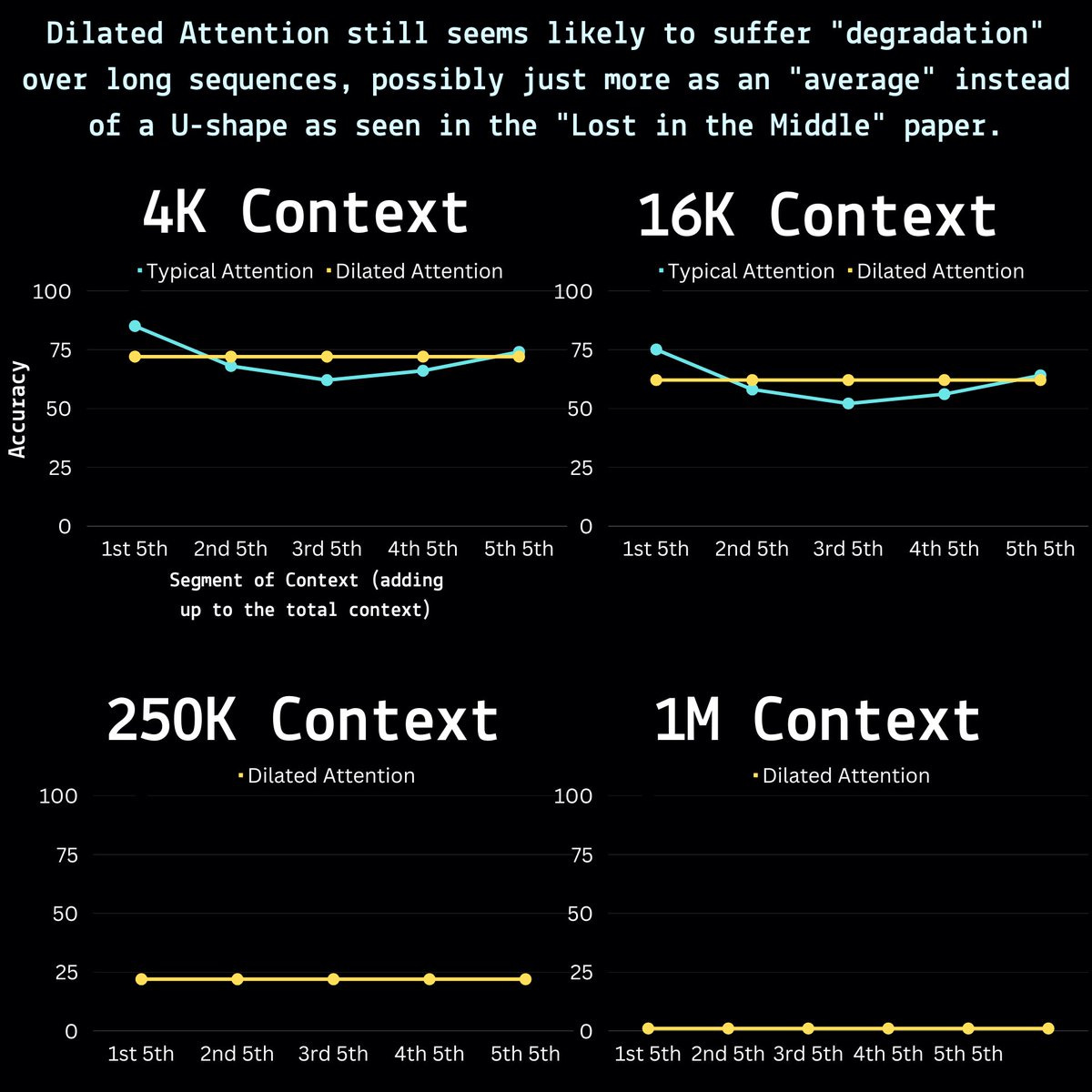

Extending Context is Hard | kaiokendev.github.io

Extending Context is Hard | kaiokendev.github.io. In this case, the pre-trained model is LLaMA, with a pre-training sequence length of 2048. Naively fine-tuning the model on long sequences never ...

Context length VS Max token VS Maximum length - API - OpenAI

*Variable Sequence Length Training for Long-Context Large Language*

Context length VS Max token VS Maximum length - API - OpenAI. Context length (or context window) usually refers to the total number of tokens permitted by your model. It can also refer to the number of ...
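As a rough illustration of the definition above, here is a minimal sketch of how a token budget might be computed. It assumes the common convention that the prompt and the generated completion share one context window; the function name `max_completion_tokens` and the example numbers are hypothetical and not taken from the OpenAI thread.

```python
def max_completion_tokens(context_length: int, prompt_tokens: int) -> int:
    """Return how many tokens remain for the completion.

    Assumption: prompt tokens and generated tokens are counted
    against the same context window.
    """
    if context_length <= 0:
        raise ValueError("context_length must be positive")
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    # If the prompt already fills the window, nothing is left to generate.
    return max(context_length - prompt_tokens, 0)


# A 4096-token window with a 3000-token prompt leaves 1096 tokens.
print(max_completion_tokens(context_length=4096, prompt_tokens=3000))  # 1096
```

Under this convention, a "max tokens" setting larger than the remaining budget cannot be honored in full, which is one reason the three terms in the thread title are easy to confuse.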

Difficulty understanding sequence length and context length · vllm

*Passkey Retrieval performance up to 256K context length for SAMBA*

Difficulty understanding sequence length and context length · vllm. I’ve been trying to play with the Phi3-mini-128k model, which in theory should give a context length of about 128k tokens.

Sequence_length vs context_length in autoformer - Beginners

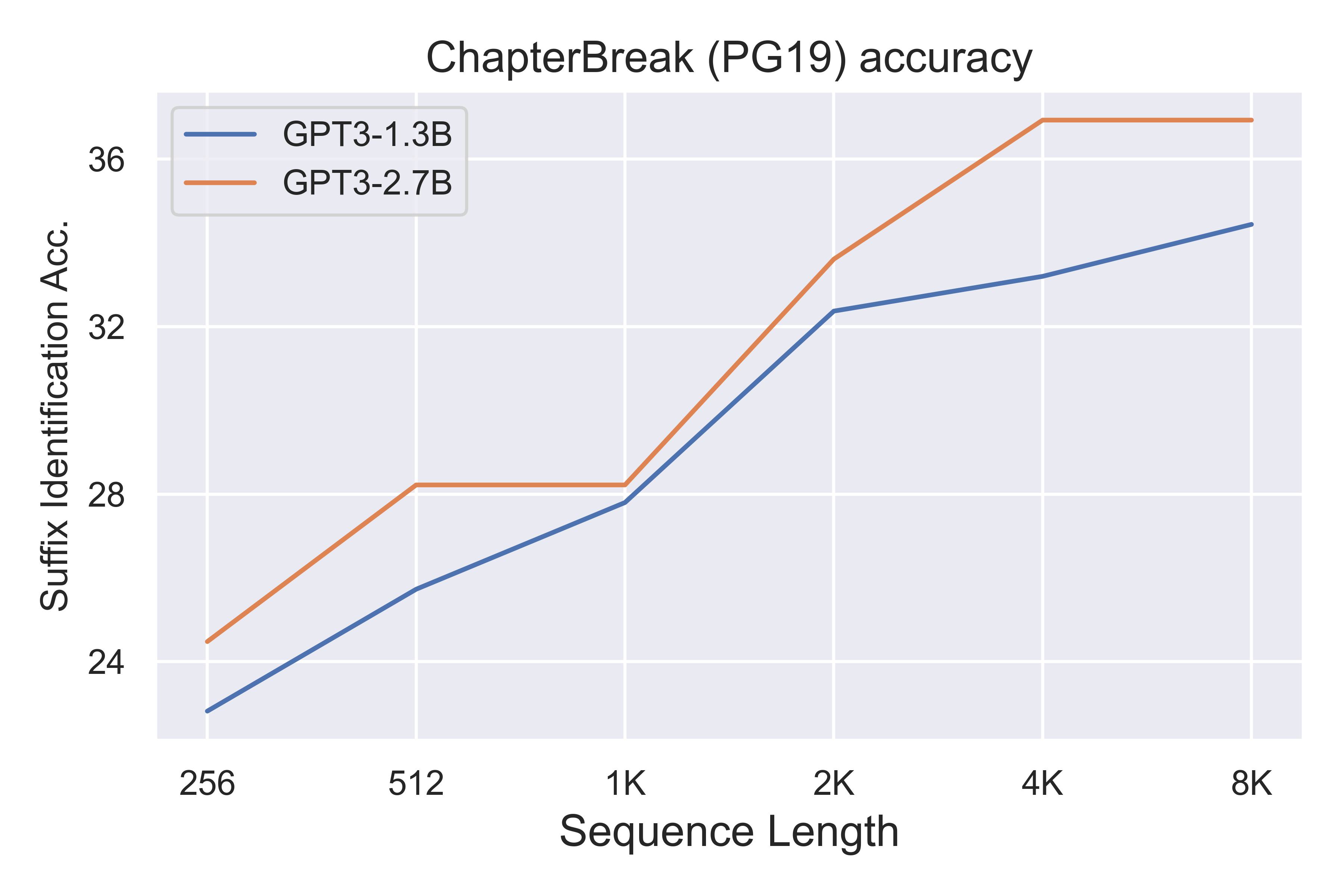

*The Context Window Dilemma - Davis Treybig*

Sequence_length vs context_length in autoformer - Beginners. However, generally, the sequence_length, often used in the context of preparing your dataset, refers to the total length of each sequence or ...
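To make the distinction concrete, the sketch below slices a time series into training windows. It assumes, purely for illustration, that each prepared sequence is the model's input window (`context_length`) followed by the values to forecast (`prediction_length`). That split, and the helper name `make_windows`, are assumptions and not the Autoformer library's own definition.

```python
from typing import List, Sequence, Tuple


def make_windows(
    series: Sequence[float],
    context_length: int,
    prediction_length: int,
) -> List[Tuple[List[float], List[float]]]:
    """Slice a series into (context, target) pairs.

    Assumption: sequence_length = context_length + prediction_length.
    """
    if context_length <= 0 or prediction_length <= 0:
        raise ValueError("both lengths must be positive")
    sequence_length = context_length + prediction_length
    windows = []
    # Slide one step at a time; stop when a full sequence no longer fits.
    for start in range(len(series) - sequence_length + 1):
        split = start + context_length
        context = list(series[start:split])
        target = list(series[split:start + sequence_length])
        windows.append((context, target))
    return windows


pairs = make_windows([0, 1, 2, 3, 4, 5], context_length=3, prediction_length=2)
print(pairs[0])  # ([0, 1, 2], [3, 4])
```

In this sketch the dataset-side length (5) is larger than the model-side context length (3), which is the kind of mismatch the forum question is about.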

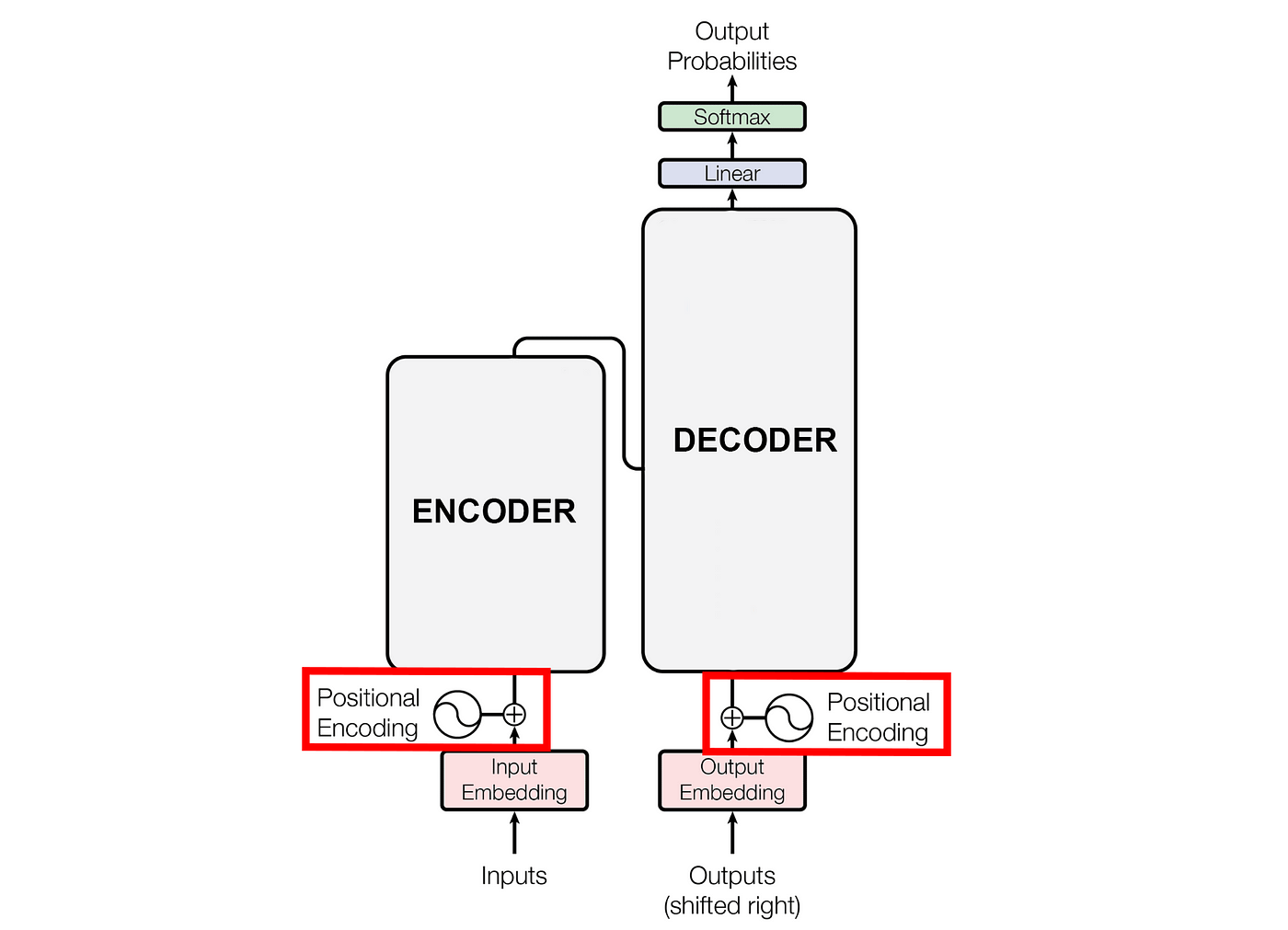

De-Coded: Understanding Context Windows for Transformer Models

*Cameron R. Wolfe, Ph.D. on X: “The impressive in-context learning*

De-Coded: Understanding Context Windows for Transformer Models. The context window is the maximum sequence length that a transformer can process at a time. With the rise of proprietary LLMs that limit the ...

Yes, in large language models, window and context length refer to the same thing: the maximum token sequence length that the language model ...
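Because the context window caps how many tokens a model can process at once, longer inputs have to be truncated or split. The following is a minimal sketch of one possible splitting strategy, assuming the input is already tokenized into a list of integer IDs. The helper `split_into_windows` and its `overlap` parameter are hypothetical and not part of any library cited here.

```python
from typing import List


def split_into_windows(
    token_ids: List[int],
    context_length: int,
    overlap: int = 0,
) -> List[List[int]]:
    """Split token IDs into chunks of at most context_length tokens.

    Consecutive chunks share `overlap` tokens so that text near a
    chunk boundary appears in both neighbours.
    """
    if context_length <= 0:
        raise ValueError("context_length must be positive")
    if not 0 <= overlap < context_length:
        raise ValueError("overlap must be in [0, context_length)")
    step = context_length - overlap
    chunks = []
    for start in range(0, len(token_ids), step):
        chunks.append(token_ids[start:start + context_length])
        # Stop once this chunk has reached the end of the input.
        if start + context_length >= len(token_ids):
            break
    return chunks


chunks = split_into_windows(list(range(10)), context_length=4)
print([len(c) for c in chunks])  # [4, 4, 2]
```

Every chunk fits the window, but the model never sees tokens from different chunks together, which is why a larger native context length is not equivalent to chunking.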